Supported mvgam families

Usage

tweedie(link = "log")

student_t(link = "identity")

betar(...)

nb(...)

lognormal(...)

student(...)

bernoulli(...)

beta_binomial(...)

nmix(link = "log")Arguments

- link

a specification for the family link function. At present these cannot be changed

- ...

Arguments to be passed to the mgcv version of the associated functions

Details

mvgam currently supports the following standard observation families:

gaussianwith identity link, for real-valued datapoissonwith log-link, for count dataGammawith log-link, for non-negative real-valued databinomialwith logit-link, for count data when the number of trials is known (and must be supplied)

In addition, the following extended families from the mgcv and brms packages are supported:

betarwith logit-link, for proportional data on(0,1)nbwith log-link, for count datalognormalwith identity-link, for non-negative real-valued databernoulliwith logit-link, for binary databeta_binomialwith logit-link, as forbinomial()but allows for overdispersion

Finally, mvgam supports the three extended families described here:

tweediewith log-link, for count data (power parameterpfixed at1.5)student_t()(orstudent) with identity-link, for real-valued datanmixfor count data with imperfect detection modeled via a State-Space N-Mixture model. The latent states are Poisson (with log link), capturing the 'true' latent abundance, while the observation process is Binomial to account for imperfect detection. The observationformulain these models is used to set up a linear predictor for the detection probability (with logit link). See the example below for a more detailed worked explanation of thenmix()family

Only poisson(), nb(), and tweedie() are available if

using JAGS. All families, apart from tweedie(), are supported if

using Stan.

Note that currently it is not possible to change the default link

functions in mvgam, so any call to change these will be silently ignored

Examples

# \dontrun{

# =============================================================================

# N-mixture Models

# =============================================================================

set.seed(999)

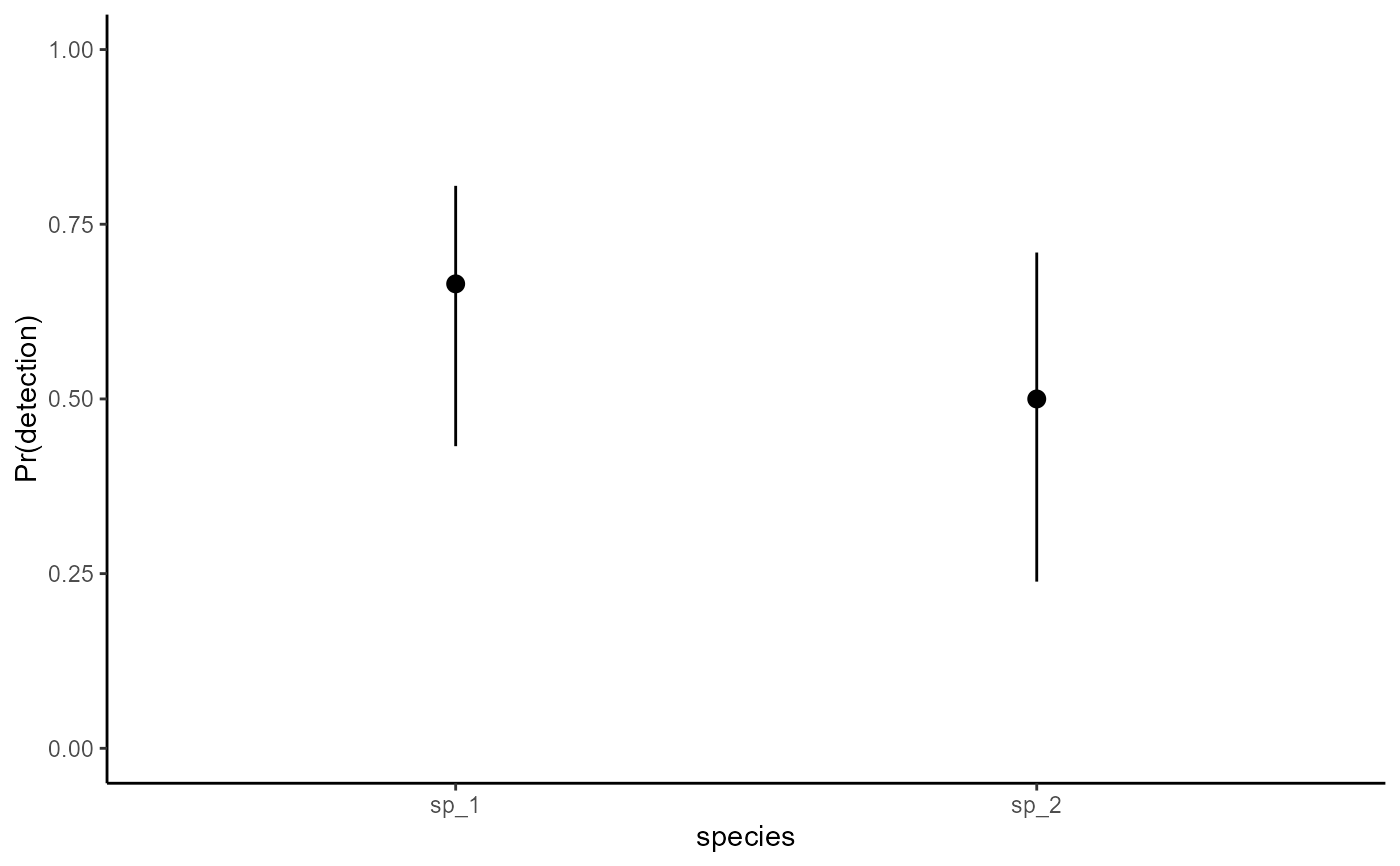

# Simulate observations for species 1, which shows a declining trend and

# 0.7 detection probability

data.frame(

site = 1,

# five replicates per year; six years

replicate = rep(1:5, 6),

time = sort(rep(1:6, 5)),

species = 'sp_1',

# true abundance declines nonlinearly

truth = c(

rep(28, 5),

rep(26, 5),

rep(23, 5),

rep(16, 5),

rep(14, 5),

rep(14, 5)

),

# observations are taken with detection prob = 0.7

obs = c(

rbinom(5, 28, 0.7),

rbinom(5, 26, 0.7),

rbinom(5, 23, 0.7),

rbinom(5, 15, 0.7),

rbinom(5, 14, 0.7),

rbinom(5, 14, 0.7)

)

) %>%

# add 'series' information, which is an identifier of site, replicate

# and species

dplyr::mutate(

series = paste0(

'site_', site,

'_', species,

'_rep_', replicate

),

time = as.numeric(time),

# add a 'cap' variable that defines the maximum latent N to

# marginalize over when estimating latent abundance; in other words

# how large do we realistically think the true abundance could be?

cap = 80

) %>%

dplyr::select(-replicate) -> testdat

# Now add another species that has a different temporal trend and a

# smaller detection probability (0.45 for this species)

testdat <- testdat %>%

dplyr::bind_rows(

data.frame(

site = 1,

replicate = rep(1:5, 6),

time = sort(rep(1:6, 5)),

species = 'sp_2',

truth = c(

rep(4, 5),

rep(7, 5),

rep(15, 5),

rep(16, 5),

rep(19, 5),

rep(18, 5)

),

obs = c(

rbinom(5, 4, 0.45),

rbinom(5, 7, 0.45),

rbinom(5, 15, 0.45),

rbinom(5, 16, 0.45),

rbinom(5, 19, 0.45),

rbinom(5, 18, 0.45)

)

) %>%

dplyr::mutate(

series = paste0(

'site_', site,

'_', species,

'_rep_', replicate

),

time = as.numeric(time),

cap = 50

) %>%

dplyr::select(-replicate)

)

# series identifiers

testdat$species <- factor(

testdat$species,

levels = unique(testdat$species)

)

testdat$series <- factor(

testdat$series,

levels = unique(testdat$series)

)

# The trend_map to state how replicates are structured

testdat %>%

# each unique combination of site*species is a separate process

dplyr::mutate(

trend = as.numeric(factor(paste0(site, species)))

) %>%

dplyr::select(trend, series) %>%

dplyr::distinct() -> trend_map

trend_map

#> trend series

#> 1 1 site_1_sp_1_rep_1

#> 2 1 site_1_sp_1_rep_2

#> 3 1 site_1_sp_1_rep_3

#> 4 1 site_1_sp_1_rep_4

#> 5 1 site_1_sp_1_rep_5

#> 6 2 site_1_sp_2_rep_1

#> 7 2 site_1_sp_2_rep_2

#> 8 2 site_1_sp_2_rep_3

#> 9 2 site_1_sp_2_rep_4

#> 10 2 site_1_sp_2_rep_5

# Fit a model

mod <- mvgam(

# the observation formula sets up linear predictors for

# detection probability on the logit scale

formula = obs ~ species - 1,

# the trend_formula sets up the linear predictors for

# the latent abundance processes on the log scale

trend_formula = ~ s(time, by = trend, k = 4) + species,

# the trend_map takes care of the mapping

trend_map = trend_map,

# nmix() family and data

family = nmix(),

data = testdat,

# priors can be set in the usual way

priors = c(

prior(std_normal(), class = b),

prior(normal(1, 1.5), class = Intercept_trend)

),

chains = 2

)

#> Compiling Stan program using cmdstanr

#>

#> Start sampling

#> Running MCMC with 2 parallel chains...

#>

#> Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 2 finished in 6.1 seconds.

#> Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 1 finished in 6.4 seconds.

#>

#> Both chains finished successfully.

#> Mean chain execution time: 6.2 seconds.

#> Total execution time: 6.5 seconds.

#>

# The usual diagnostics

summary(mod)

#> GAM observation formula:

#> obs ~ species - 1

#> <environment: 0x5582d51e0d50>

#>

#> GAM process formula:

#> ~s(time, by = trend, k = 4) + species

#> <environment: 0x5582d51e0d50>

#>

#> Family:

#> nmix

#>

#> Link function:

#> log

#>

#> Trend model:

#> None

#>

#> N process models:

#> 2

#>

#> N series:

#> 10

#>

#> N timepoints:

#> 6

#>

#> Status:

#> Fitted using Stan

#> 2 chains, each with iter = 1000; warmup = 500; thin = 1

#> Total post-warmup draws = 1000

#>

#> GAM observation model coefficient (beta) estimates:

#> 2.5% 50% 97.5% Rhat n_eff

#> speciessp_1 -0.37 0.710 1.40 1 400

#> speciessp_2 -1.10 0.055 0.86 1 421

#>

#> GAM process model coefficient (beta) estimates:

#> 2.5% 50% 97.5% Rhat n_eff

#> (Intercept)_trend 2.700 3.0000 3.500 1.00 401

#> speciessp_2_trend -1.300 -0.6400 0.140 1.00 401

#> s(time):trendtrend1.1_trend -0.064 0.0150 0.220 1.01 112

#> s(time):trendtrend1.2_trend -0.250 0.0061 0.310 1.00 389

#> s(time):trendtrend1.3_trend -0.450 -0.2500 -0.021 1.01 230

#> s(time):trendtrend2.1_trend -0.280 -0.0140 0.087 1.00 197

#> s(time):trendtrend2.2_trend -0.160 0.0350 0.430 1.01 220

#> s(time):trendtrend2.3_trend 0.051 0.3300 0.640 1.00 541

#>

#> Approximate significance of GAM process smooths:

#> edf Ref.df Chi.sq p-value

#> s(time):seriestrend1 1.208 3 2.713 0.726

#> s(time):seriestrend2 1.807 3 1.453 0.371

#>

#> Stan MCMC diagnostics:

#> ✔ No issues with effective samples per iteration

#> ✔ Rhat looks good for all parameters

#> ✔ No issues with divergences

#> ✔ No issues with maximum tree depth

#>

#> Samples were drawn using sampling(hmc). For each parameter, n_eff is a

#> crude measure of effective sample size, and Rhat is the potential scale

#> reduction factor on split MCMC chains (at convergence, Rhat = 1)

#>

#> Use how_to_cite() to get started describing this model

# Plotting conditional effects

library(ggplot2)

plot_predictions(

mod,

condition = 'species',

type = 'detection'

) +

ylab('Pr(detection)') +

ylim(c(0, 1)) +

theme_classic() +

theme(legend.position = 'none')

# =============================================================================

# Binomial Models

# =============================================================================

# Simulate two time series of Binomial trials

trials <- sample(c(20:25), 50, replace = TRUE)

x <- rnorm(50)

detprob1 <- plogis(-0.5 + 0.9 * x)

detprob2 <- plogis(-0.1 - 0.7 * x)

dat <- rbind(

data.frame(

y = rbinom(n = 50, size = trials, prob = detprob1),

time = 1:50,

series = 'series1',

x = x,

ntrials = trials

),

data.frame(

y = rbinom(n = 50, size = trials, prob = detprob2),

time = 1:50,

series = 'series2',

x = x,

ntrials = trials

)

)

dat <- dplyr::mutate(dat, series = as.factor(series))

dat <- dplyr::arrange(dat, time, series)

# Fit a model using the binomial() family; must specify observations

# and number of trials in the cbind() wrapper

mod <- mvgam(

cbind(y, ntrials) ~ series + s(x, by = series),

family = binomial(),

data = dat

)

#> Compiling Stan program using cmdstanr

#>

#> Start sampling

#> Running MCMC with 4 chains, at most 3 in parallel...

#>

#> Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 2 finished in 1.4 seconds.

#> Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 1 finished in 2.0 seconds.

#> Chain 3 finished in 1.9 seconds.

#> Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 4 finished in 1.7 seconds.

#>

#> All 4 chains finished successfully.

#> Mean chain execution time: 1.7 seconds.

#> Total execution time: 3.3 seconds.

#>

summary(mod)

#> GAM formula:

#> cbind(y, ntrials) ~ series + s(x, by = series)

#> <environment: 0x5582d51e0d50>

#>

#> Family:

#> binomial

#>

#> Link function:

#> logit

#>

#> Trend model:

#> None

#>

#> N series:

#> 2

#>

#> N timepoints:

#> 50

#>

#> Status:

#> Fitted using Stan

#> 4 chains, each with iter = 1000; warmup = 500; thin = 1

#> Total post-warmup draws = 2000

#>

#> GAM coefficient (beta) estimates:

#> 2.5% 50% 97.5% Rhat n_eff

#> (Intercept) -0.440 -0.31000 -0.170 1.01 1077

#> seriesseries2 -0.066 0.13000 0.310 1.00 1090

#> s(x):seriesseries1.1 -0.300 -0.00910 0.330 1.01 375

#> s(x):seriesseries1.2 -0.950 -0.02900 0.200 1.04 77

#> s(x):seriesseries1.3 -0.640 -0.01400 0.082 1.04 75

#> s(x):seriesseries1.4 -0.300 -0.00210 0.100 1.04 84

#> s(x):seriesseries1.5 -0.230 -0.00098 0.067 1.04 86

#> s(x):seriesseries1.6 -0.200 -0.00044 0.078 1.03 97

#> s(x):seriesseries1.7 -0.310 -0.00210 0.100 1.04 85

#> s(x):seriesseries1.8 -2.400 0.00110 0.900 1.04 83

#> s(x):seriesseries1.9 0.100 0.77000 1.200 1.01 148

#> s(x):seriesseries2.1 -0.210 0.03800 0.420 1.00 771

#> s(x):seriesseries2.2 -0.260 0.02000 0.490 1.00 386

#> s(x):seriesseries2.3 -0.130 -0.00740 0.160 1.00 466

#> s(x):seriesseries2.4 -0.120 -0.01000 0.120 1.00 439

#> s(x):seriesseries2.5 -0.088 -0.01100 0.078 1.00 494

#> s(x):seriesseries2.6 -0.096 -0.01100 0.085 1.00 440

#> s(x):seriesseries2.7 -0.120 -0.01400 0.120 1.00 426

#> s(x):seriesseries2.8 -1.200 -0.16000 0.980 1.00 428

#> s(x):seriesseries2.9 -1.100 -0.62000 -0.280 1.00 738

#>

#> Approximate significance of GAM smooths:

#> edf Ref.df Chi.sq p-value

#> s(x):seriesseries1 1.534 9 33.91 0.03272 *

#> s(x):seriesseries2 1.631 9 18.06 0.00133 **

#> ---

#> Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

#>

#> Stan MCMC diagnostics:

#> ✔ No issues with effective samples per iteration

#> ✔ Rhat looks good for all parameters

#> ✔ No issues with divergences

#> ✔ No issues with maximum tree depth

#>

#> Samples were drawn using sampling(hmc). For each parameter, n_eff is a

#> crude measure of effective sample size, and Rhat is the potential scale

#> reduction factor on split MCMC chains (at convergence, Rhat = 1)

#>

#> Use how_to_cite() to get started describing this model

# }

# =============================================================================

# Binomial Models

# =============================================================================

# Simulate two time series of Binomial trials

trials <- sample(c(20:25), 50, replace = TRUE)

x <- rnorm(50)

detprob1 <- plogis(-0.5 + 0.9 * x)

detprob2 <- plogis(-0.1 - 0.7 * x)

dat <- rbind(

data.frame(

y = rbinom(n = 50, size = trials, prob = detprob1),

time = 1:50,

series = 'series1',

x = x,

ntrials = trials

),

data.frame(

y = rbinom(n = 50, size = trials, prob = detprob2),

time = 1:50,

series = 'series2',

x = x,

ntrials = trials

)

)

dat <- dplyr::mutate(dat, series = as.factor(series))

dat <- dplyr::arrange(dat, time, series)

# Fit a model using the binomial() family; must specify observations

# and number of trials in the cbind() wrapper

mod <- mvgam(

cbind(y, ntrials) ~ series + s(x, by = series),

family = binomial(),

data = dat

)

#> Compiling Stan program using cmdstanr

#>

#> Start sampling

#> Running MCMC with 4 chains, at most 3 in parallel...

#>

#> Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 2 finished in 1.4 seconds.

#> Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

#> Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 1 finished in 2.0 seconds.

#> Chain 3 finished in 1.9 seconds.

#> Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

#> Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

#> Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

#> Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

#> Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

#> Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

#> Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

#> Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

#> Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

#> Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

#> Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

#> Chain 4 finished in 1.7 seconds.

#>

#> All 4 chains finished successfully.

#> Mean chain execution time: 1.7 seconds.

#> Total execution time: 3.3 seconds.

#>

summary(mod)

#> GAM formula:

#> cbind(y, ntrials) ~ series + s(x, by = series)

#> <environment: 0x5582d51e0d50>

#>

#> Family:

#> binomial

#>

#> Link function:

#> logit

#>

#> Trend model:

#> None

#>

#> N series:

#> 2

#>

#> N timepoints:

#> 50

#>

#> Status:

#> Fitted using Stan

#> 4 chains, each with iter = 1000; warmup = 500; thin = 1

#> Total post-warmup draws = 2000

#>

#> GAM coefficient (beta) estimates:

#> 2.5% 50% 97.5% Rhat n_eff

#> (Intercept) -0.440 -0.31000 -0.170 1.01 1077

#> seriesseries2 -0.066 0.13000 0.310 1.00 1090

#> s(x):seriesseries1.1 -0.300 -0.00910 0.330 1.01 375

#> s(x):seriesseries1.2 -0.950 -0.02900 0.200 1.04 77

#> s(x):seriesseries1.3 -0.640 -0.01400 0.082 1.04 75

#> s(x):seriesseries1.4 -0.300 -0.00210 0.100 1.04 84

#> s(x):seriesseries1.5 -0.230 -0.00098 0.067 1.04 86

#> s(x):seriesseries1.6 -0.200 -0.00044 0.078 1.03 97

#> s(x):seriesseries1.7 -0.310 -0.00210 0.100 1.04 85

#> s(x):seriesseries1.8 -2.400 0.00110 0.900 1.04 83

#> s(x):seriesseries1.9 0.100 0.77000 1.200 1.01 148

#> s(x):seriesseries2.1 -0.210 0.03800 0.420 1.00 771

#> s(x):seriesseries2.2 -0.260 0.02000 0.490 1.00 386

#> s(x):seriesseries2.3 -0.130 -0.00740 0.160 1.00 466

#> s(x):seriesseries2.4 -0.120 -0.01000 0.120 1.00 439

#> s(x):seriesseries2.5 -0.088 -0.01100 0.078 1.00 494

#> s(x):seriesseries2.6 -0.096 -0.01100 0.085 1.00 440

#> s(x):seriesseries2.7 -0.120 -0.01400 0.120 1.00 426

#> s(x):seriesseries2.8 -1.200 -0.16000 0.980 1.00 428

#> s(x):seriesseries2.9 -1.100 -0.62000 -0.280 1.00 738

#>

#> Approximate significance of GAM smooths:

#> edf Ref.df Chi.sq p-value

#> s(x):seriesseries1 1.534 9 33.91 0.03272 *

#> s(x):seriesseries2 1.631 9 18.06 0.00133 **

#> ---

#> Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

#>

#> Stan MCMC diagnostics:

#> ✔ No issues with effective samples per iteration

#> ✔ Rhat looks good for all parameters

#> ✔ No issues with divergences

#> ✔ No issues with maximum tree depth

#>

#> Samples were drawn using sampling(hmc). For each parameter, n_eff is a

#> crude measure of effective sample size, and Rhat is the potential scale

#> reduction factor on split MCMC chains (at convergence, Rhat = 1)

#>

#> Use how_to_cite() to get started describing this model

# }